Download vSphere Client 6.7

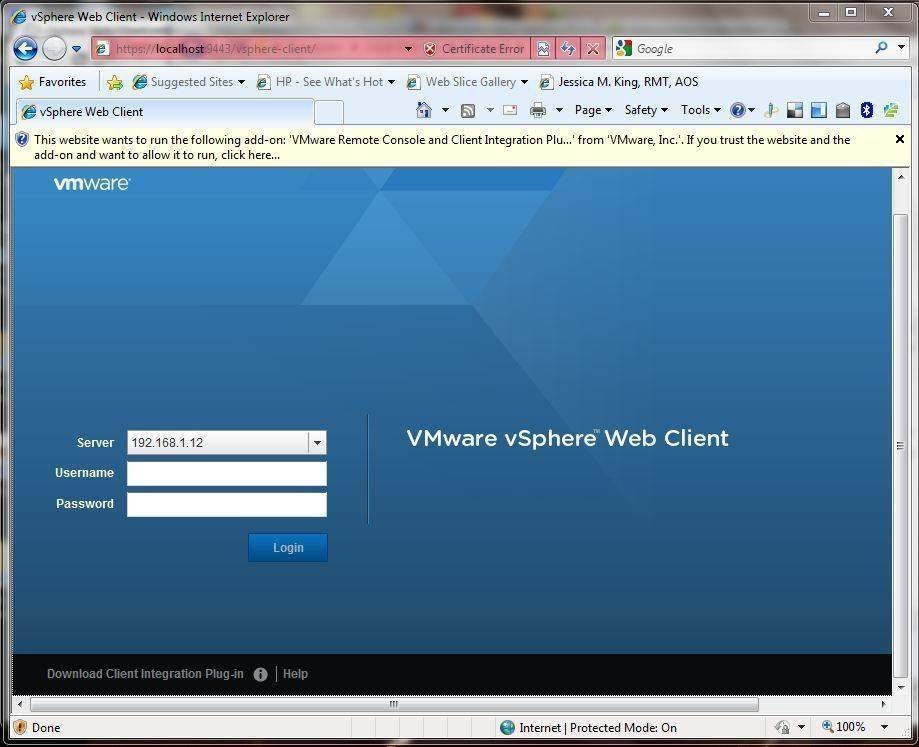

Oct 31, 2017: All of those download links are now centralized on a single VMware vSphere Client download page. It has been live for some time, but I haven't seen other bloggers write about it. So, if you are not aware, there is a VMware KB article that lists all available versions of the VMware vSphere Client, starting with version 5.0.

VMware's release of vSphere 6.7 U1 on Oct. 16, 2018, despite being only an update, was a major event because this version includes a near-feature-complete vSphere Client. For years, VMware has been slowly replacing its Flash-based vSphere Web Client with the HTML5-based vSphere Client.

Hi, really sorry for what seems like an easy question, but I am struggling to find the vSphere 6.7 Client installer. I have just upgraded my ESXi host from 5.5 to 6.7 and my client no longer works.

Updated on: 17 APR 2018

- ESXi 6.7 | 17 APR 2018 | ISO Build 8169922

- vCenter Server 6.7 | 17 APR 2018 | ISO Build 8217866

- vCenter Server Appliance 6.7 | 17 APR 2018 | Build 8217866

Check for additions and updates to these release notes.

What's in the Release Notes

The release notes cover the following topics:

What's New

This release of VMware vSphere 6.7 includes ESXi 6.7 and vCenter Server 6.7. Read about the new and enhanced features in this release in What's New in VMware vSphere 6.7.

Internationalization

VMware vSphere 6.7 is available in the following languages:

- English

- French

- German

- Spanish

- Japanese

- Korean

- Simplified Chinese

- Traditional Chinese

Components of vSphere 6.7, including vCenter Server, vCenter Server Appliance, ESXi, the vSphere Client, the vSphere Web Client, and the VMware Host Client, do not accept non-ASCII input.

Compatibility

ESXi and vCenter Server Version Compatibility

The VMware Product Interoperability Matrix provides details about the compatibility of current and earlier versions of VMware vSphere components, including ESXi, VMware vCenter Server, and optional VMware products. Check the VMware Product Interoperability Matrix also for information about supported management and backup agents before you install ESXi or vCenter Server.

The vSphere Update Manager, vSphere Client, and vSphere Web Client are packaged with vCenter Server.

Hardware Compatibility for ESXi

To view a list of processors, storage devices, SAN arrays, and I/O devices that are compatible with vSphere 6.7, use the ESXi 6.7 information in the VMware Compatibility Guide.

Device Compatibility for ESXi

To determine which devices are compatible with ESXi 6.7, use the ESXi 6.7 information in the VMware Compatibility Guide.

Guest Operating System Compatibility for ESXi

To determine which guest operating systems are compatible with vSphere 6.7, use the ESXi 6.7 information in the VMware Compatibility Guide.

Virtual Machine Compatibility for ESXi

Virtual machines that are compatible with ESX 3.x and later (hardware version 4) are supported with ESXi 6.7. Virtual machines that are compatible with ESX 2.x and later (hardware version 3) are not supported. To use such virtual machines on ESXi 6.7, upgrade the virtual machine compatibility. See the ESXi Upgrade documentation.

Installation and Upgrades for This Release

Installation Notes for This Release

Read the ESXi Installation and Setup and the vCenter Server Installation and Setup documentation for guidance about installing and configuring ESXi and vCenter Server.

Although the installations are straightforward, several subsequent configuration steps are essential. Read the following documentation:

- 'License Management and Reporting' in the vCenter Server and Host Management documentation

- 'Networking' in the vSphere Networking documentation

- 'Security' in the vSphere Security documentation for information on firewall ports

VMware introduces a new Configuration Maximums tool to help you plan your vSphere deployments. Use this tool to view VMware-recommended limits for virtual machines, ESXi, vCenter Server, vSAN, networking, and so on. You can also compare limits for two or more product releases. The VMware Configuration Maximums tool is best viewed on larger format devices such as desktops and laptops.

VMware Tools Bundling Changes in ESXi 6.7

In ESXi 6.7, a subset of VMware Tools 10.2 ISO images are bundled with the ESXi 6.7 host.

The following VMware Tools 10.2 ISO images are bundled with ESXi:

- windows.iso: VMware Tools image for Windows Vista or higher

- linux.iso: VMware Tools image for Linux OS with glibc 2.5 or higher

The following VMware Tools 10.2 ISO images are available for download:

- solaris.iso: VMware Tools image for Solaris

- freebsd.iso: VMware Tools image for FreeBSD

- darwin.iso: VMware Tools image for OSX

Follow the procedures listed in the following documents to download VMware Tools for platforms not bundled with ESXi:

Migrating Third-Party Solutions

For information about upgrading with third-party customizations, see the ESXi Upgrade documentation. For information about using Image Builder to make a custom ISO, see the ESXi Installation and Setup documentation.

Upgrades and Installations Disallowed for Unsupported CPUs

Compared with the processors supported by vSphere 6.5, vSphere 6.7 no longer supports the following processors:

- AMD Opteron 13xx Series

- AMD Opteron 23xx Series

- AMD Opteron 24xx Series

- AMD Opteron 41xx Series

- AMD Opteron 61xx Series

- AMD Opteron 83xx Series

- AMD Opteron 84xx Series

- Intel Core i7-620LE Processor

- Intel i3/i5 Clarkdale Series

- Intel Xeon 31xx Series

- Intel Xeon 33xx Series

- Intel Xeon 34xx Clarkdale Series

- Intel Xeon 34xx Lynnfield Series

- Intel Xeon 35xx Series

- Intel Xeon 36xx Series

- Intel Xeon 52xx Series

- Intel Xeon 54xx Series

- Intel Xeon 55xx Series

- Intel Xeon 56xx Series

- Intel Xeon 65xx Series

- Intel Xeon 74xx Series

- Intel Xeon 75xx Series

During an installation or upgrade, the installer checks the compatibility of the host CPU with vSphere 6.7. If your host hardware is not compatible, a purple screen appears with an incompatibility information message, and the vSphere 6.7 installation process stops.

The following CPUs are supported in the vSphere 6.7 release, but they may not be supported in future vSphere releases. Please plan accordingly:

- Intel Xeon E3-1200 (SNB-DT)

- Intel Xeon E7-2800/4800/8800 (WSM-EX)

Upgrade Notes for This Release

For instructions about upgrading ESXi hosts and vCenter Server, see the ESXi Upgrade and the vCenter Server Upgrade documentation.

Open Source Components for vSphere 6.7

The copyright statements and licenses applicable to the open source software components distributed in vSphere 6.7 are available at http://www.vmware.com. You need to log in to your My VMware account. Then, from the Downloads menu, select vSphere. On the Open Source tab, you can also download the source files for any GPL, LGPL, or other similar licenses that require the source code or modifications to source code to be made available for the most recent available release of vSphere.

Product Support Notices

The vSphere 6.7 release is the final release of vCenter Server for Windows. After this release, vCenter Server for Windows will not be available. For more information, see Farewell, vCenter Server for Windows.

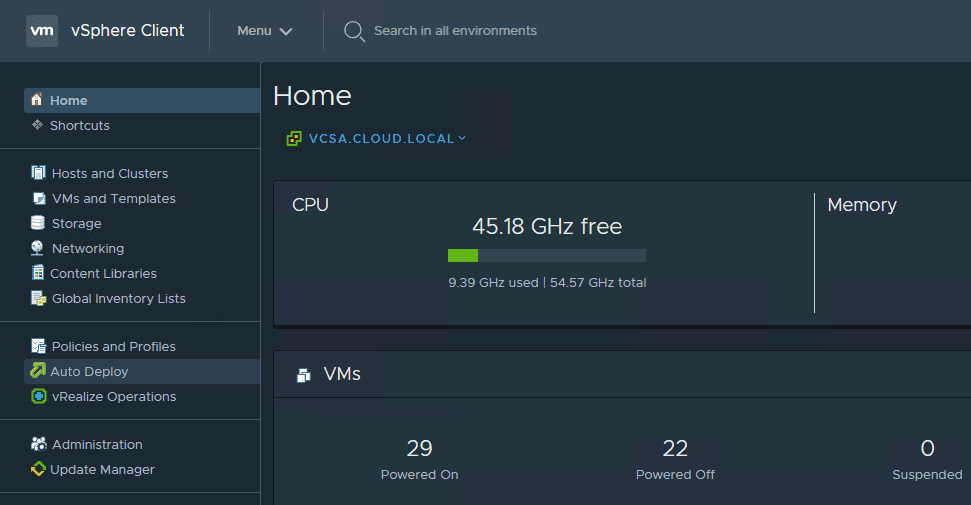

In vSphere 6.7, the vSphere Client (HTML5) has many new features and is close to being a fully functional client with all the capabilities of vSphere Web Client (Flex). The majority of the features required to manage vCenter Server operations are available in this version, including vSAN and initial support for vSphere Update Manager (VUM). For an up-to-date list of unsupported functionality, see Functionality Updates for the vSphere Client Guide.

vSphere 6.7 continues to offer the vSphere Web Client, which you can use for all advanced vCenter Server operations missing in the vSphere Client. However, VMware plans to deprecate the vSphere Web Client in future releases. For more information, see Goodbye, vSphere Web Client.

The VMware Host Client is a Web based application that you can use to manage individual ESXi hosts that are not connected to a vCenter Server system.

The vSphere 6.7 release is the final release for two sets of vSphere client APIs: the vSphere Web Client APIs (Flex) and the current set of vSphere Client APIs (HTML5), also known as the Bridge APIs. A new set of vSphere Client APIs are included as part of the vSphere 6.7 release. These new APIs are designed to scale and support the use cases and improved security, design, and extensibility of the vSphere Client.

VMware is deprecating webplatform.js, which will be replaced with an improved way to push updates into partner plugin solutions without any lifecycle dependencies on vSphere Client SDK updates.

Note: If you have an existing plugin solution to the vSphere Client, you must upgrade the Virgo server. Existing vSphere Client plugins will not be compatible with the vSphere 6.7 release unless you make this upgrade. See Upgrading Your Plug-in To Maintain Compatibility with vSphere Client SDK 6.7 for information on upgrading the Virgo server.

In vSphere 6.7, only TLS 1.2 is enabled by default. TLS 1.0 and TLS 1.1 are disabled by default. If you upgrade vCenter Server or Platform Services Controller to vSphere 6.7, and that vCenter Server instance or Platform Services Controller instance connects to ESXi hosts, other vCenter Server instances, or other services, you might encounter communication problems.

To resolve this issue, you can use the TLS Configurator utility to enable older versions of the protocol temporarily on vSphere 6.7 systems. You can then disable the older, less secure versions after all connections use TLS 1.2. For information, see Managing TLS Protocol Configuration with the TLS Configurator Utility in the vSphere 6.7 Documentation Set.

In the vSphere 6.7 release, vCenter Server does not support the TLS 1.2 connection for Oracle databases.

The vSphere 6.7 release is the final release that supports replacing VMCA issued solution user certificates with custom certificates through the UI. Renewing solution user certificates with VMCA issued certificates through the UI will continue to be supported in future releases.

The vSphere 6.7 release is the final release that requires customers to specify SSO sites. This descriptor is not required for any vSphere functionality and will be removed. Future vSphere releases will not include SSO Sites as a customer configurable item.

You cannot install VMware vCenter Server Appliance 6.7 on the original release of ESXi 6.0 with an IPv6 network. Instead, install vCenter Server Appliance on ESXi 6.0 Update 1a or later with an IPv6 network.

Upgrade and patch operations of virtual appliances are deprecated in the Update Manager 6.7 release.

The Windows pre-Vista ISO image for VMware Tools is no longer packaged with the vSphere 6.7 release. The Windows pre-Vista ISO image is available for download by users who require it. For download information, see VMware Tools Downloads.

- The TFTP service included in the vCenter Server and Photon appliances is not supported by VMware. It is recommended to use this service only for testing and development purposes, and not in production environment. By using this service, you acknowledge and agree that you are using it solely at your own risk. VMware reserves the right to remove this service in future releases.

Known Issues

The known issues are grouped as follows.

Installation, Upgrade, and Migration Issues

- ESXi installation or upgrade fails due to memory corruption on HPE ProLiant - DL380/360 Gen 9 Servers

The issue occurs on HPE ProLiant - DL380/360 Gen 9 Servers that have a Smart Array P440ar storage controller.

Workaround: Set the server BIOS mode to UEFI before you install or upgrade ESXi.

- After an ESXi upgrade to version 6.7 and a subsequent rollback to version 6.5 or earlier, you might experience failures with error messages

You might see failures and error messages when you perform one of the following on your ESXi host after reverting to 6.5 or earlier versions:

- Install patches and VIBs on the host

Error message: [DependencyError] VIB VMware_locker_tools-light requires esx-version >= 6.6.0

- Install or upgrade VMware Tools on VMs

Error message: Unable to install VMware Tools.

After the ESXi rollback from version 6.7, the new tools-light VIB does not revert to the earlier version. As a result, the VIB becomes incompatible with the rolled back ESXi host causing these issues.

Workaround: Perform the following to fix this problem.

SSH to the host and run one of these commands:

esxcli software vib install -v /path/to/tools-light.vib

or

esxcli software vib install -d /path/to/depot/zip -n tools-light

Where the vib and zip are of the currently running ESXi version.

Note: For VMs that already have new VMware Tools installed, you do not have to revert VMware Tools back when ESXi host is rolled back.
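The dependency error above follows from a simple version comparison: the tools-light VIB left behind by the 6.7 upgrade requires esx-version >= 6.6.0, and a host rolled back to 6.5 or earlier cannot satisfy it. The following Python sketch illustrates that comparison only. The function names are hypothetical, and the threshold is taken from the error message quoted above, not from any VMware API.

```python
# Minimal sketch: why a rolled-back host rejects the newer tools-light VIB.
# REQUIRED_ESX_VERSION comes from the DependencyError text in the release notes;
# the helper names are illustrative assumptions, not part of esxcli.

def parse_version(text):
    """Turn a dotted version such as '6.5.0' into a comparable tuple (6, 5, 0)."""
    return tuple(int(part) for part in text.split("."))


REQUIRED_ESX_VERSION = parse_version("6.6.0")


def tools_light_dependency_met(host_version):
    """Return True if the host version satisfies esx-version >= 6.6.0."""
    return parse_version(host_version) >= REQUIRED_ESX_VERSION


if __name__ == "__main__":
    for version in ("6.0.0", "6.5.0", "6.7.0"):
        state = "satisfied" if tools_light_dependency_met(version) else "not satisfied"
        print(f"ESXi {version}: tools-light dependency {state}")
```

When the dependency is not satisfied, the workaround is to reinstall the tools-light VIB that matches the running ESXi version, as described above.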

- Special characters backslash (\) or double quote (") used in passwords cause the installation pre-check to fail

If the special characters backslash (\) or double quote (") are used in ESXi, vCenter Single Sign-On, or operating system password fields in the vCenter Server Appliance installation templates, the installation pre-check fails with the following error:

Error message: com.vmware.vcsa.installer.template.cli_argument_validation: Invalid escape: line ## column ## (char ###)

Workaround: If you include the special characters backslash (\) or double quote (") in the passwords for ESXi, operating systems, or Single Sign-On, the special characters need to be escaped. For example, a backslash in a password must be written as a double backslash (\\).

- Windows vCenter Server 6.7 installer fails when non-ASCII characters are present in password

The Windows vCenter Server 6.7 installer fails when the Single Sign-on password contains non-ASCII characters for Chinese, Japanese, Korean, and Taiwanese locales.

Workaround: Ensure that the Single Sign-on password contains ASCII characters only for Chinese, Japanese, Korean, and Taiwanese locales.
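The two password issues above (special characters that must be escaped in installation templates, and non-ASCII characters in the Windows installer) can be checked before you start an installation. The following Python sketch assumes that the installation templates are JSON files, which matches the "Invalid escape" error text. The function names are hypothetical and are not part of any VMware tool.

```python
import json

# Minimal sketch, assuming the installer template is a JSON file.
# json.dumps produces a JSON string literal in which a backslash becomes \\
# and a double quote becomes \", which is the escaping the workaround asks for.


def escape_for_json_template(password):
    """Return the password as a JSON string literal, including the surrounding quotes."""
    return json.dumps(password)


def is_ascii_only(password):
    """Return True if the password contains only ASCII characters."""
    return password.isascii()


def template_parses(template_text):
    """Return True if the template text is valid JSON, False on an escape or syntax error."""
    try:
        json.loads(template_text)
        return True
    except json.JSONDecodeError:
        return False


if __name__ == "__main__":
    password = 'pa\\ss"word'  # actual characters: pa\ss"word
    print("Escaped literal:", escape_for_json_template(password))
    print("ASCII only:", is_ascii_only(password))
```

Pasting the unescaped password into the template produces invalid JSON, while the escaped literal round-trips to the original password.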

- Cannot log in to vSphere Appliance Management Interface if the colon character (:) is part of vCenter Server root password

During the vCenter Server Appliance UI installation (Set up appliance VM page of Stage 1), if you include the colon character (:) as part of the vCenter Server root password, logging in to the vSphere Appliance Management Interface (https://vc_ip:5480) fails. The password might be accepted by the password rule check during the setup, but login fails.

Workaround: Do not use the colon character (:) to set the vCenter Server root password in the vCenter Server Appliance UI (Set up appliance VM page of Stage 1).

- vCenter Server Appliance installation fails when the backslash character (\) is included in the vCenter Single Sign-On password

During the vCenter Server Appliance UI installation (SSO setup page of Stage 2), if you include the backslash character (\) as part of the vCenter Single Sign-On password, the installation fails with the error Analytics Service registration with Component Manager failed. The password might be accepted by the password rule check, but installation fails.

Workaround: Do not use the backslash character (\) to set the vCenter Single Sign-On password in the vCenter Server Appliance UI installer (SSO setup page of Stage 2).
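Because the UI installer's own password rule check accepts both problem characters, it can help to screen the passwords yourself before Stage 1 and Stage 2. The following Python sketch encodes only the two restrictions described above (a colon in the root password and a backslash in the vCenter Single Sign-On password). The function name and message wording are hypothetical.

```python
from typing import List

# Minimal sketch: screen UI-installer passwords for the two characters that the
# known issues above identify. This does not replace the installer's rule check.


def ui_installer_password_problems(root_password: str, sso_password: str) -> List[str]:
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    if ":" in root_password:
        problems.append("root password contains a colon (:)")
    if "\\" in sso_password:
        problems.append("Single Sign-On password contains a backslash (\\)")
    return problems


if __name__ == "__main__":
    for problem in ui_installer_password_problems("Adm:n123!", "Vmw\\are1!"):
        print("Problem:", problem)
```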

- Scripted ESXi installation fails on HP ProLiant Gen 9 Servers with an error

When you perform a scripted ESXi installation on an HP ProLiant Gen 9 Server under the following conditions:

- The Embedded User Partition option is enabled in the BIOS.

- You use multiple USB drives during installation: one USB drive contains the ks.cfg file, and the other USB drive is not formatted and usable.

The installation fails with the error message Partitions not initialized.

Workaround:

- Disable the Embedded User Partition option in the server BIOS.

- Format the unformatted USB drive with a file system or unplug it from the server.

- Windows vCenter Server 6.0.x or 6.5.x upgrade to vCenter Server 6.7 fails if vCenter Server contains non-ASCII or high-ASCII named 5.5 host profiles

When a source Windows vCenter Server 6.0.x or 6.5.x contains vCenter Server 5.5.x host profiles named with non-ASCII or high-ASCII characters, UpgradeRunner fails to start during the upgrade pre-check process.

Workaround: Before upgrading Windows vCenter Server 6.0.x or 6.5.x to vCenter Server 6.7, upgrade the ESXi 5.5.x with the non-ASCII or high-ASCII named host profiles to ESXi 6.0.x or 6.5.x, then update the host profile from the upgraded host by clicking Copy setting from the hosts.

- You cannot run the camregister command with the -x option if the vCenter Single Sign-On password contains non-ASCII characters

When you run the camregister command with the -x file option, for example, to register the vSphere Authentication Proxy, the process fails with an access denied error when the vCenter Single Sign-On password contains non-ASCII characters.

Workaround: Either set up the vCenter Single Sign-On password with ASCII characters, or use the -p password option when you run the camregister command to enter the vCenter Single Sign-On password that contains non-ASCII characters.

- The Bash shell and SSH login are disabled after upgrading to vCenter Server 6.7

After upgrading to vCenter Server 6.7, you are not able to access the vCenter Server Appliance using either the Bash shell or SSH login.

Workaround:

- After successfully upgrading to vCenter Server 6.7, log in to the vCenter Server Appliance Management Interface. In a Web browser, go to: https://appliance_ip_address_or_fqdn:5480

- Log in as root.

The default root password is the password you set while deploying the vCenter Server Appliance.

- Click Access, and click Edit.

- Edit the access settings for the Bash shell and SSH login.

When enabling Bash shell access to the vCenter Server Appliance, enter the number of minutes to keep access enabled.

- Click OK to save the settings.

- Management node migration is blocked if vCenter Server for Windows 6.0 is installed on Windows Server 2008 R2 without previously enabling Transport Layer Security 1.2

This issue occurs if you are migrating vCenter Server for Windows 6.0 using an external Platform Services Controller (an MxN topology) on Windows Server 2008 R2. After migrating the external Platform Services Controller (PSC), when you run Migration Assistant on the Management node it fails, reporting that it cannot retrieve the PSC version. This error occurs because Windows Server 2008 R2 does not support Transport Layer Security (TLS) 1.2 by default, which is the default TLS protocol for Platform Services Controller 6.7.

Workaround: Enable TLS 1.2 for Windows Server 2008 R2.

- Navigate to the registry key:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\SecurityProviders\SCHANNEL\Protocols

- Create a new folder and label it TLS 1.2.

- Create two new keys within the TLS 1.2 folder, and name the keys Client and Server.

- Under the Client key, create two DWORD (32-bit) values, and name them DisabledByDefault and Enabled.

- Under the Server key, create two DWORD (32-bit) values, and name them DisabledByDefault and Enabled.

- Ensure that the Value field is set to 0 and that the Base is Hexadecimal for DisabledByDefault.

- Ensure that the Value field is set to 1 and that the Base is Hexadecimal for Enabled.

- Reboot the Windows Server 2008 R2 computer.

For more information on using TLS 1.2 with Windows Server 2008 R2, refer to the operating system vendor's documentation.
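As an alternative to creating the keys by hand, the same values can be expressed as a registry (.reg) file and imported on the Windows Server 2008 R2 machine. The following Python sketch only generates the text of such a file from the steps above. The function name is hypothetical, the output should be reviewed before import, and the reboot is still required afterward.

```python
# Minimal sketch: build the text of a .reg file that mirrors the manual steps
# (Client and Server keys under "TLS 1.2", DisabledByDefault=0, Enabled=1).
# Generating the file does not change the registry; importing it does.

TLS12_KEY = (
    r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control"
    r"\SecurityProviders\SCHANNEL\Protocols\TLS 1.2"
)


def tls12_reg_file_text():
    """Return .reg file content that enables TLS 1.2 for both Client and Server."""
    lines = ["Windows Registry Editor Version 5.00", ""]
    for subkey in ("Client", "Server"):
        lines.append(f"[{TLS12_KEY}\\{subkey}]")
        lines.append('"DisabledByDefault"=dword:00000000')
        lines.append('"Enabled"=dword:00000001')
        lines.append("")
    return "\r\n".join(lines)


if __name__ == "__main__":
    print(tls12_reg_file_text())
```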

- vCenter Server containing host profiles with version less than 6.0 fails during upgrade to version 6.7

vCenter Server 6.7 does not support host profiles with version less than 6.0. To upgrade to vCenter Server 6.7, you must first upgrade the host profiles to version 6.0 or later, if you have any of the following components:

- ESXi host(s) version - 5.1 or 5.5

- vCenter server version - 6.0 or 6.5

- Host profiles version - 5.1 or 5.5

Workaround: See KB 52932
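The upgrade condition above reduces to a version comparison: any host profile with a version lower than 6.0 blocks the upgrade to vCenter Server 6.7. The following Python sketch shows that check over a list of version strings. How you collect the versions from your inventory is left open, and the function names are hypothetical.

```python
# Minimal sketch: find host profile versions that would block an upgrade to
# vCenter Server 6.7. The input list is assumed to come from your own inventory.

MINIMUM_HOST_PROFILE_VERSION = (6, 0)


def parse_version(text):
    """Turn '5.5' or '6.0.0' into a tuple of integers for comparison."""
    return tuple(int(part) for part in text.split("."))


def blocking_host_profiles(versions):
    """Return the versions that are lower than 6.0 and must be upgraded first."""
    return [v for v in versions if parse_version(v) < MINIMUM_HOST_PROFILE_VERSION]


if __name__ == "__main__":
    found = blocking_host_profiles(["5.1", "5.5", "6.0", "6.5"])
    print("Host profile versions to upgrade first:", found)
```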

- After upgrading to vCenter Server 6.7, any edits to the ESXi host's /etc/ssh/sshd_config file are discarded, and the file is restored to the vCenter Server 6.7 default configuration

Due to changes in the default values in the /etc/ssh/sshd_config file, the vCenter Server 6.7 upgrade replaces any manual edits to this configuration file with the default configuration. This change was necessary as some prior settings (for example, permitted ciphers) are no longer compatible with current ESXi behavior, and prevented SSHD (SSH daemon) from starting correctly.

CAUTION: Editing /etc/ssh/sshd_config is not recommended. SSHD is disabled by default, and the preferred method for editing the system configuration is through the VIM API (including the ESXi Host Client interface) or ESXCLI.

Workaround: If edits to /etc/ssh/sshd_config are needed, you can apply them after successfully completing the vCenter Server 6.7 upgrade. The default configuration file now contains a version number. Preserve the version number to avoid overwriting the file.

For further information on editing the /etc/ssh/sshd_config file, see the following Knowledge Base articles:

- For information on enabling public/private key authentication, see Knowledge Base article KB 1002866

- For information on changing the default SSHD configuration, see Knowledge Base article KB 1020530

- Virtualization Based Security (VBS) on vSphere in Windows Guest OSs RS1, RS2 and RS3 requires Hyper-V to be enabled in the Guest OS.

Workaround: Enable Hyper-V Platform on Windows Server 2016. In the Server Manager, under Local Server select Manage -> Add Roles and Features Wizard and under Role-based or feature-based installation select Hyper-V from the server pool and specify the server roles. Choose defaults for Server Roles, Features, Hyper-V, Virtual Switches, Migration and Default Stores. Reboot the host.

Enable Hyper-V on Windows 10: Browse to Control Panel -> Programs -> Turn Windows features on or off. Check the Hyper-V Platform which includes the Hyper-V Hypervisor and Hyper-V Services. Uncheck Hyper-V Management Tools. Click OK. Reboot the host.

- Hostprofile PeerDNS flags do not work in some scenarios

If PeerDNS for IPv4 is enabled for a vmknic on a stateless host that has an associated host profile, the iPv6PeerDNS might appear with a different state in the extracted host profile after the host reboots.

Workaround: None.

- When you upgrade vSphere Distributed Switches to version 6.6, you might encounter a few known issues

During upgrade, the connected virtual machines might experience packet loss for a few seconds.

Workaround: If you have multiple vSphere Distributed Switches that need to be upgraded to version 6.6, upgrade the switches sequentially.

Schedule the upgrade of vSphere Distributed Switches during a maintenance window, set DRS mode to manual, and do not apply DRS recommendations for the duration of the upgrade.

For more details about known issues and solutions, see KB 52621

- VM fails to power on when Network I/O Control is enabled and all active uplinks are down

A VM fails to power on when Network I/O Control is enabled and the following conditions are met:

- The VM is connected to a distributed port group on a vSphere distributed switch

- The VM is configured with bandwidth allocation reservation and the VM's network adapter (vNIC) has a reservation configured

- The distributed port group teaming policy is set to Failover

- All active uplinks on the distributed switch are down. In this case, vSphere DRS cannot use the standby uplinks and the VM fails to power on.

Workaround: Move the available standby adapters to the active adapters list in the teaming policy of the distributed port group.

- Network flapping on a NIC that uses qfle3f driver might cause ESXi host to crash

The qfle3f driver might cause the ESXi host to crash (PSOD) when the physical NIC that uses the qfle3f driver experiences frequent link status flapping every 1-2 seconds.

Workaround: Make sure that network flapping does not occur. If the link status flapping interval is more than 10 seconds, the qfle3f driver does not cause ESXi to crash. For more information, see KB 2008093.
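To judge whether a NIC is flapping fast enough to be a concern, you can compare the time between consecutive link state changes with the 10-second interval mentioned above. The following Python sketch works on a plain list of timestamps in seconds. Where those timestamps come from (for example, parsed from host logs) is an assumption left to the reader, and the function name is hypothetical. The release notes state only that intervals of more than 10 seconds do not cause a crash, so the sketch flags everything else.

```python
# Minimal sketch: flag gaps between link state changes that are not longer than
# the 10-second interval the release notes describe as safe for qfle3f.


def short_flap_intervals(timestamps, safe_interval=10.0):
    """Return the gaps (in seconds) between consecutive events that are <= safe_interval."""
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return [gap for gap in gaps if gap <= safe_interval]


if __name__ == "__main__":
    events = [0.0, 1.5, 3.0, 30.0]  # illustrative link up/down timestamps
    print("Gaps at or below the safe interval:", short_flap_intervals(events))
```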

- Port Mirror traffic packets of ERSPAN Type III fail to be recognized by packet analyzers

An incorrect bit introduced in the ERSPAN Type III packet header causes all ERSPAN Type III packets to appear corrupt in packet analyzers.

Workaround: Use GRE or ERSPAN Type II packets, if your traffic analyzer supports these types.

- DNS configuration esxcli commands are not supported on non-default TCP/IP stacks

DNS configuration of non-default TCP/IP stacks is not supported. Commands such as esxcli network ip dns server add -N vmotion -s 10.11.12.13 do not work.

Workaround: Do not use DNS configuration esxcli commands on non-default TCP/IP stacks.

- Compliance check fails with an error when applying a host profile with enabled default IPv4 gateway for vmknic interface

When applying a host profile with an enabled default IPv4 gateway for a vmknic interface, the setting is populated with '0.0.0.0' and does not match the host info, resulting in the following error:

IPv4 vmknic gateway configuration doesn't match the specification

Workaround:

- Edit the host profile settings.

- Navigate to Networking configuration > Host virtual nic or Host portgroup > (name of the vSphere Distributed Switch or name of portgroup) > IP address settings.

- From the Default gateway VMkernel Network Adapter (IPv4) drop-down menu, select Choose a default IPv4 gateway for the vmknic and enter the Vmknic Default IPv4 gateway.

- Intel Fortville series NICs cannot receive Geneve encapsulation packets with option length bigger than 255 bytes

If you configure Geneve encapsulation with option length bigger than 255 bytes, the packets are not received correctly on Intel Fortville NICs X710, XL710, and XXV710.

Workaround: Disable hardware VLAN stripping on these NICs by running the following command:

esxcli network nic software set --untagging=1 -n vmnicX

- RSPAN_SRC mirror session fails after migration

When a VM connected to a port assigned for an RSPAN_SRC mirror session is migrated to another host, and there is no required pNic on the destination network of the destination host, the RSPAN_SRC mirror session fails to configure on the port. This causes a port connection failure, but the vMotion migration process succeeds.

Workaround: To recover from the port connection failure, complete either one of the following:

- Remove the failed port and add a new port.

- Disable the port and enable it.

The mirror session fails to configure, but the port connection is restored.

- NFS datastores intermittently become read-only

A host's NFS datastores may become read-only when the NFS vmknic temporarily loses its IP address or after a stateless host reboots.

Workaround: You can unmount and remount the datastores to regain connectivity through the NFS vmknic. You can also set the NFS datastore write permission to both the IP address of the NFS vmknic and the IP address of the Management vmknic.

- When editing a VM's storage policies, selecting Host-local PMem Storage Policy fails with an error

In the Edit VM Storage Policies dialog, if you select Host-local PMem Storage Policy from the dropdown menu and click OK, the task fails with one of these errors:

The operation is not supported on the object.

or

Incompatible device backing specified for device '0'

Workaround: You cannot apply the Host-local PMem Storage Policy to VM home. For a virtual disk, you can use the migration wizard to migrate the virtual disk and apply the Host-local PMem Storage Policy.

- Datastores might appear as inaccessible after ESXi hosts in a cluster recover from a permanent device loss state

This issue might occur in an environment where the hosts in the cluster share a large number of datastores, for example, 512 to 1000 datastores.

After the hosts in the cluster recover from the permanent device loss condition, the datastores are mounted successfully at the host level. However, in vCenter Server, several datastores might continue to appear as inaccessible for a number of hosts.

Workaround: On the hosts that show inaccessible datastores in the vCenter Server view, perform the Rescan Storage operation from vCenter Server.

- Migration of a virtual machine from a VMFS3 datastore to VMFS5 fails in a mixed ESXi 6.5 and 6.7 host environment

If you have a mixed host environment, you cannot migrate a virtual machine from a VMFS3 datastore connected to an ESXi 6.5 host to a VMFS5 datastore on an ESXi 6.7 host.

Workaround: Upgrade the VMFS3 datastore to VMFS5 to be able to migrate the VM to the ESXi 6.7 host.

- Warning message about a VMFS3 datastore remains unchanged after you upgrade the VMFS3 datastore using the CLI

Typically, you use the CLI to upgrade the VMFS3 datastore that failed to upgrade during an ESXi upgrade. The VMFS3 datastore might fail to upgrade due to several reasons including the following:

- No space is available on the VMFS3 datastore.

- One of the extents on the spanned datastore is offline.

After you fix the cause of the failure and upgrade the VMFS3 datastore to VMFS5 using the CLI, the host continues to detect the VMFS3 datastore and reports the following error:

Deprecated VMFS (ver 3) volumes found. Upgrading such volumes to VMFS (ver5) is mandatory for continued availability on vSphere 6.7 host.

Workaround: To remove the error message, restart hostd using the /etc/init.d/hostd restart command or reboot the host.

- The Mellanox ConnectX-4/ConnectX-5 native ESXi driver might exhibit performance degradation when its Default Queue Receive Side Scaling (DRSS) feature is turned on

Receive Side Scaling (RSS) technology distributes incoming network traffic across several hardware-based receive queues, allowing inbound traffic to be processed by multiple CPUs. In Default Queue Receive Side Scaling (DRSS) mode, the entire device is in RSS mode. The driver presents a single logical queue to OS and is backed by several hardware queues.

The native nmlx5_core driver for the Mellanox ConnectX-4 and ConnectX-5 adapter cards enables the DRSS functionality by default. While DRSS helps to improve performance for many workloads, it could lead to possible performance degradation with certain multi-VM and multi-vCPU workloads.

Workaround: If significant performance degradation is observed, you can disable the DRSS functionality.

- Run the esxcli system module parameters set -m nmlx5_core -p DRSS=0 RSS=0 command.

- Reboot the host.

- Datastore name does not extract to the Coredump File setting in the host profile

When you extract a host profile, the Datastore name field is empty in the Coredump File setting of the host profile. The issue appears when you use an esxcli command to set the coredump file.

Workaround:

- Extract a host profile from an ESXi host.

- Edit the host profile settings and navigate to General System Settings > Core Dump Configuration > Coredump File.

- Select Create the Coredump file with an explicit datastore and size option and enter the Datastore name, where you want the Coredump File to reside.

- Native software FCoE adapters configured on an ESXi host might disappear when the host is rebooted

After you successfully enable the native software FCoE adapter (vmhba) supported by the vmkfcoe driver and then reboot the host, the adapter might disappear from the list of adapters. This might occur when you use Cavium QLogic 57810 or QLogic 57840 CNAs supported by the qfle3 driver.

Workaround: To recover the vmkfcoe adapter, perform these steps:

- Run the esxcli storage core adapter list command to make sure that the adapter is missing from the list.

- Verify the vSwitch configuration on vmnic associated with the missing FCoE adapter.

- Run the following command to discover the FCoE vmhba:

- On a fabric setup:

#esxcli fcoe nic discover -n vmnic_number

- On a VN2VN setup:

#esxcli fcoe nic discover -n vmnic_number

- Attempts to create a VMFS datastore on an ESXi 6.7 host might fail in certain software FCoE environments

Your attempts to create the VMFS datastore fail if you use the following configuration:

- Native software FCoE adapters configured on an ESXi 6.7 host.

- Cavium QLogic 57810 or 57840 CNAs.

- Cisco FCoE switch connected directly to an FCoE port on a storage array from the Dell EMC VNX5300 or VNX5700 series.

Workaround: None.

As an alternative, you can switch to the following end-to-end configuration:

ESXi host > Cisco FCoE switch > FC switch > storage array from the Dell EMC VNX5300 or VNX5700 series.

- Windows Explorer displays some backups with Unicode differently from how browsers and file system paths show them

Some backups containing Unicode characters display differently in the Windows Explorer file system folder than they do in browsers and file system paths.

Workaround: Using http, https, or ftp, you can browse backups with your web browser instead of going to the storage folder locations through Windows Explorer.

- The time synchronization mode setting is not retained when upgrading vCenter Server Appliance

If NTP time synchronization is disabled on a source vCenter Server Appliance and you upgrade to vCenter Server Appliance 6.7, NTP time synchronization is enabled on the newly upgraded appliance after the upgrade completes.

Workaround:

- After successfully upgrading to vCenter Server Appliance 6.7, log in to the vCenter Server Appliance Management Interface as root.

The default root password is the password you set while deploying the vCenter Server Appliance.

- In the vCenter Server Appliance Management Interface, click Time.

- In the Time Synchronization pane, click Edit.

- From the Mode drop-down menu, select Disabled.

The newly upgraded vCenter Server Appliance 6.7 will no longer use NTP time synchronization, and will instead use the system time zone settings.

- Login to vSphere Web Client with Windows session authentication fails on Firefox version 54 or later

If you use Firefox version 54 or later to log in to the vSphere Web Client, and you use your Windows session for authentication, the VMware Enhanced Authentication Plugin might fail to populate your user name and to log you in.

Workaround: If you are using Windows session authentication to log in to the vSphere Web Client, use one of the following browsers: Internet Explorer, Chrome, or Firefox version 53 or earlier.

- vCenter hardware health alarm notifications are not triggered in some instances

When multiple sensors in the same category on an ESXi host are tripped within a time span of less than five minutes, traps are not received and email notifications are not sent.

Workaround: None. You can check the hardware sensors section for any alerts.
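Because no notification arrives in this situation, it can help to review exported sensor events for clusters that fall inside the five-minute span. The following minimal Python sketch shows one way to do that. The event tuple layout `(category, sensor, timestamp_seconds)` and the function name are hypothetical, and the grouping rule is an interpretation of the description above, not VMware's internal logic.

```python
from collections import defaultdict

WINDOW_SECONDS = 5 * 60  # the five-minute span named in the release note


def events_to_review(events):
    """Return events that share a category with another event that
    tripped less than five minutes earlier or later.

    events: iterable of (category, sensor, timestamp_seconds) tuples.
    """
    by_category = defaultdict(list)
    for category, sensor, ts in events:
        by_category[category].append((ts, sensor))

    flagged = set()
    for category, items in by_category.items():
        items.sort()
        # Compare each event with its predecessor in the same category.
        for (prev_ts, prev_sensor), (ts, sensor) in zip(items, items[1:]):
            if ts - prev_ts < WINDOW_SECONDS:
                flagged.add((category, prev_sensor, prev_ts))
                flagged.add((category, sensor, ts))
    return sorted(flagged)
```

Any event returned by `events_to_review` is a candidate for a manual check in the hardware sensors section, since its alarm notification might not have been sent.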

- When you use the VCSA installer Time Sync option with an NTP server, the target ESXi host must already be connected to the NTP server

If you select time synchronization with an NTP server in the VCSA installer (Stage 2 > Appliance configuration > Time Sync option), the target ESXi host must already be connected to an NTP server in its Time & Date settings. Otherwise, the installation fails.

Workaround: Use one of the following options:

- Set the Time Sync option in Stage 2 > Appliance configuration to synchronize with the ESXi host.

- Set the Time Sync option in Stage 2 > Appliance configuration to synchronize with NTP servers, and make sure that both the ESXi host and vCenter Server are configured to connect to NTP servers.

- When you monitor Windows vCenter Server health, an error message appears

Health service is not available for Windows vCenter Server. If you select the vCenter Server, and click Monitor > Health, an error message appears:

Unable to query vSAN health information. Check vSphere Client logs for details.

This problem can occur after you upgrade the Windows vCenter Server from release 6.0 Update 1 or 6.0 Update 2 to release 6.7. You can ignore this message.

Workaround: None. Users can access vSAN health information through the vCenter Server Appliance.

- vCenter hardware health alarms do not function with earlier ESXi versions

If an ESXi host of version 6.5 Update 1 or earlier is added to vCenter Server 6.7, hardware health alarms are not generated when hardware events occur, such as high CPU temperatures, fan failures, and voltage fluctuations.

Workaround: None.

- vCenter Server stops working in some cases when using vmodl to edit or expand a disk

When you configure a VM disk in a Storage DRS-enabled cluster using the latest vmodl, vCenter Server stops working. A previous workaround using an earlier vmodl no longer works and will also cause vCenter Server to stop working.

Workaround: None

- vCenter Server for Windows migration to vCenter Server Appliance fails with error

When you migrate vCenter Server for Windows 6.0.x or 6.5.x to vCenter Server Appliance 6.7, the migration might fail during the data export stage with the error:

The compressed zip folder is invalid or corrupted.

Workaround: You must zip the data export folder manually and follow these steps:

- In the source system, create an environment variable MA_INTERACTIVE_MODE.

- Go to Computer > Properties > Advanced system settings > Environment Variables > System Variables > New.

- Enter 'MA_INTERACTIVE_MODE' as variable name with value 0 or 1.

- Start the VMware Migration Assistant and provide your password.

- Start the migration from the client machine. The migration pauses, and the Migration Assistant console displays the message:

To continue the migration, create the export.zip file manually from the export data (include export folder).

NOTE: Do not press any keys or tabs on the Migration Assistant console.

- Go to the %appdata%\vmware\migration-assistant folder.

- Delete the export.zip created by the Migration Assistant.

- To continue the migration, manually create the export.zip file from the export folder.

- Return to the Migration Assistant console. Type Y and press Enter.
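The delete-and-recreate steps for export.zip can also be scripted. The following is a minimal Python sketch under stated assumptions: the function name is hypothetical, the caller supplies the Migration Assistant folder, and the archive keeps `export/` as its top-level entry to match the "include export folder" hint in the console message. Whether the Migration Assistant accepts an archive produced this way has not been verified here.

```python
import shutil
from pathlib import Path


def rebuild_export_zip(assistant_dir):
    """Delete the existing export.zip and recreate it from the export folder.

    assistant_dir: the Migration Assistant folder that contains both
    the 'export' folder and the export.zip file.
    """
    assistant_dir = Path(assistant_dir)
    zip_path = assistant_dir / "export.zip"
    if zip_path.exists():
        zip_path.unlink()  # remove the archive created by the Migration Assistant
    # make_archive appends ".zip" to the base name; base_dir="export"
    # keeps the export folder itself as the top-level entry.
    shutil.make_archive(
        str(assistant_dir / "export"),
        "zip",
        root_dir=str(assistant_dir),
        base_dir="export",
    )
    return zip_path
```

After the function returns, go back to the Migration Assistant console and confirm as described in the last step.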

- Discrepancy between the build number in VAMI and the build number in the vSphere Client

In vSphere 6.7, the VAMI summary tab displays the ISO build for the vCenter Server and vCenter Server Appliance products. The vSphere Client summary tab displays the build for the vCenter product, which is a component within the vCenter Server product.

Workaround: None

- vCenter Server Appliance 6.7 displays an error message in the Available Update section of the vCenter Server Appliance Management Interface (VAMI)

The Available Update section of the vCenter Server Appliance Management Interface (VAMI) displays the following error message:

Check the URL and try again.

This message is generated when the vCenter Server Appliance searches for and fails to find a patch or update. No functionality is impacted by this issue. This issue will be resolved with the release of the first patch for vSphere 6.7.

Workaround: None. No functionality is impacted by this issue.

- Name of the virtual machine in the inventory changes to its path name

This issue might occur when a datastore where the VM resides enters the All Paths Down state and becomes inaccessible. When hostd is loading or reloading VM state, it is unable to read the VM's name and returns the VM path instead. For example, /vmfs/volumes/123456xxxxxxcc/cs-00.111.222.333.

Workaround: After you resolve the storage issue, the virtual machine reloads, and its name is displayed again.
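To spot affected virtual machines in an exported inventory list, a simple name check may be enough. The following minimal Python sketch assumes that an affected name starts with `/vmfs/volumes/`, as in the example above. The function names are hypothetical, and the input is assumed to be a plain list of inventory names.

```python
VMFS_PREFIX = "/vmfs/volumes/"  # prefix taken from the example in the release note


def looks_like_path_name(vm_name):
    """True if an inventory name resembles a datastore path, not a VM name."""
    return vm_name.startswith(VMFS_PREFIX)


def affected_vms(vm_names):
    """Return the inventory names that appear to have been replaced by a path."""
    return [name for name in vm_names if looks_like_path_name(name)]
```

A VM flagged this way should show its regular name again once the storage issue is resolved and the VM reloads.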

- You must select the 'Secure boot' Platform Security Level when enabling VBS in a Guest OS on AMD systems

On AMD systems, vSphere virtual machines do not provide a vIOMMU. Since vIOMMU is required for DMA protection, AMD users cannot select 'Secure Boot and DMA protection' in the Windows Group Policy Editor when they 'Turn on Virtualization Based Security'. Instead select 'Secure boot.' If you select the wrong option it will cause VBS services to be silently disabled by Windows.

Workaround: Select 'Secure boot' Platform Security Level in a Guest OS on AMD systems.

- You cannot hot add memory and CPU for Windows VMs when Virtualization Based Security (VBS) is enabled within Windows

Virtualization Based Security (VBS) is a new feature introduced in Windows 10 and Windows Server 2016. vSphere supports running Windows with VBS enabled starting in the vSphere 6.7 release. However, hot add of memory and CPU does not work for Windows VMs when VBS is enabled.

Workaround: Power off the VM, change the memory or CPU settings, and power on the VM.

- Snapshot tree of a linked-clone VM might be incomplete after a vSAN network recovery from a failure

A vSAN network failure might impact accessibility of vSAN objects and VMs. After a network recovery, the vSAN objects regain accessibility. The hostd service reloads the VM state from storage to recover VMs. However, for a linked-clone VM, hostd might not detect that the parent VM namespace has recovered its accessibility. This results in the VM remaining in inaccessible state and VM snapshot information not being displayed in vCenter Server.

Workaround: Unregister the VM, then re-register it to force hostd to reload the VM state. Snapshot information will be loaded from storage.

- An OVF Virtual Appliance fails to start in the vSphere Client

The vSphere Client does not support selecting vService extensions in the Deploy OVF Template wizard. As a result, if an OVF virtual appliance uses vService extensions and you use the vSphere Client to deploy the OVF file, the deployment succeeds, but the virtual appliance fails to start.

Workaround: Use the vSphere Web Client to deploy OVF virtual appliances that use vService extensions.

- When you configure Proactive HA in Manual/MixedMode in vSphere 6.7 RC build you are prompted twice to apply DRS recommendations

When you configure Proactive HA in Manual/MixedMode in vSphere 6.7 RC build and a red health update is sent from the Proactive HA provider plug-in, you are prompted twice to apply the recommendations under Cluster -> Monitor -> vSphere DRS -> Recommendations. The first prompt is to enter the host into maintenance mode. The second prompt is to migrate all VMs on a host entering maintenance mode. In vSphere 6.5, these two steps are presented as a single recommendation for entering maintenance mode, which lists all VMs to be migrated.

Workaround: There is no impact to workflow or results. You must apply the recommendations twice. If you are using automated scripts, you must modify the scripts to include the additional step.

- Lazy import upgrade interaction when VCHA is not configured

The VCHA feature is available as part of 6.5 release. As of 6.5, a VCHA cluster cannot be upgraded while preserving the VCHA configuration. The recommended approach for upgrade is to first remove the VCHA configuration either through vSphere Client or by calling a destroy VCHA API. So for lazy import upgrade workflow without VCHA configuration, there is no interaction with VCHA.

Do not configure a fresh VCHA setup while lazy import is in progress. The VCHA setup requires cloning the Active VM as Passive/Witness VM. As a result of an ongoing lazy import, the amount of data that needs to be cloned is large and may lead to performance issues.

Workaround: None.

- Reboot of an ESXi stateless host resets the numRxQueue value of the host

When an ESXi host provisioned with vSphere Auto Deploy reboots, it loses the previously set numRxQueue value. The Host Profiles feature does not support saving the numRxQueue value after the host reboots.

Workaround: After the ESXi stateless host reboots:

- Remove the vmknic from the host.

- Create a vmknic on the host with the expected numRxQueue value.

- After caching on a drive, if the server is in the UEFI mode, a boot from cache does not succeed unless you explicitly select the device to boot from the UEFI boot manager

In case of Stateless Caching, after the ESXi image is cached on a 512n, 512e, USB, or 4Kn target disk, the ESXi stateless boot from autodeploy might fail on a system reboot. This occurs if autodeploy service is down.

The system attempts to search for the cached ESXi image on the next disk in the boot order. If the cached ESXi image is found, the host boots from it. In legacy BIOS, this feature works without problems. However, in the UEFI mode of the BIOS, the next device with the cached image might not be found. As a result, the host cannot boot from the image even if the image is present on the disk.

Workaround: If autodeploy service is down, on the system reboot, manually select the disk with the cached image from the UEFI Boot Manager.

- A stateless ESXi host boot time might take 20 minutes or more

The booting of a stateless ESXi host with 1,000 configured datastores might require 20 minutes or more.

Workaround: None.

- ESXi might fail during reboot with VMs running on the iSCSI LUNs claimed by the qfle3i driver

ESXi might fail during reboot with VMs running on the iSCSI LUNs claimed by the qfle3i driver if you attempt to reboot the server with VMs in the running I/O state.

Workaround: First power off VMs and then reboot the ESXi host.

- VXLAN stateless hardware offloads are not supported with Guest OS TCP traffic over IPv6 on UCS VIC 13xx adapters

You may experience issues with VXLAN encapsulated TCP traffic over IPv6 on Cisco UCS VIC 13xx adapters configured to use the VXLAN stateless hardware offload feature. For VXLAN deployments involving Guest OS TCP traffic over IPv6, TCP packets subject to TSO are not processed correctly by the Cisco UCS VIC 13xx adapters, which causes traffic disruption. The stateless offloads are not performed correctly. From a TCP protocol standpoint, this may cause incorrect packet checksums to be reported to the ESXi software stack, which may lead to incorrect TCP protocol processing in the Guest OS.

Workaround: To resolve this issue, disable the VXLAN stateless offload feature on the Cisco UCS VIC 13xx adapters for VXLAN encapsulated TCP traffic over IPv6. To disable the VXLAN stateless offload feature in UCS Manager, disable the Virtual Extensible LAN field in the Ethernet Adapter Policy. To disable the VXLAN stateless offload feature in the CIMC of a Cisco C-Series UCS server, uncheck the Enable VXLAN field in the Ethernet Interfaces vNIC properties section.

- Significant time might be required to list a large number of unresolved VMFS volumes using the batch QueryUnresolvedVmfsVolume API

ESXi provides the batch QueryUnresolvedVmfsVolume API, so that you can query and list unresolved VMFS volumes or LUN snapshots. You can then use other batch APIs to perform operations, such as resignaturing specific unresolved VMFS volumes. By default, when the API QueryUnresolvedVmfsVolume is invoked on a host, the system performs an additional filesystem liveness check for all unresolved volumes that are found. The liveness check detects whether the specified LUN is mounted on other hosts, whether an active VMFS heartbeat is in progress, or if there is any filesystem activity. This operation is time consuming and requires at least 16 seconds per LUN. As a result, when your environment has a large number of snapshot LUNs, the query and listing operation might take significant time.
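To get a feel for the scale, the 16-second figure can be turned into a rough lower bound. The following minimal Python sketch assumes that the liveness checks run one after another, which the release note does not state explicitly. The function name is hypothetical.

```python
SECONDS_PER_LUN = 16  # lower bound per LUN stated in the release note


def min_liveness_check_seconds(unresolved_luns):
    """Lower-bound estimate of the query time, assuming sequential checks."""
    return unresolved_luns * SECONDS_PER_LUN
```

For example, `min_liveness_check_seconds(1000)` returns 16000 seconds, which is roughly 4.4 hours for 1,000 snapshot LUNs.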

Workaround: To decrease the time of the query operation, you can disable the filesystem liveness check.

- Log in to your host as root.

- Open the configuration file for hostd using a text editor. The configuration file is located in /etc/vmware/hostd/config.xml under plugins/hostsvc/storage node.

- Add the checkLiveFSUnresolvedVolume parameter and set its value to FALSE. Use the following syntax:

<checkLiveFSUnresolvedVolume>FALSE</checkLiveFSUnresolvedVolume>

As an alternative, you can set the ESXi Advanced option VMFS.UnresolvedVolumeLiveCheck to FALSE in the vSphere Client.
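The manual edit of the hostd configuration file can be sketched in Python as follows. This is a minimal illustration and not a supported tool: the function name and the sample XML are hypothetical, and the real config.xml contains many more elements. The sketch refuses to create the plugins/hostsvc/storage node if it is missing. Note that ElementTree's default parser drops XML comments, so back up the original file before writing any result to disk.

```python
import xml.etree.ElementTree as ET

PARAM = "checkLiveFSUnresolvedVolume"


def disable_liveness_check(config_xml):
    """Return config_xml with checkLiveFSUnresolvedVolume set to FALSE
    under the plugins/hostsvc/storage node."""
    root = ET.fromstring(config_xml)
    storage = root.find("plugins/hostsvc/storage")
    if storage is None:
        raise ValueError("plugins/hostsvc/storage node not found")
    param = storage.find(PARAM)
    if param is None:
        param = ET.SubElement(storage, PARAM)  # add the parameter once
    param.text = "FALSE"
    return ET.tostring(root, encoding="unicode")


# Hypothetical, heavily reduced stand-in for /etc/vmware/hostd/config.xml.
SAMPLE = "<config><plugins><hostsvc><storage></storage></hostsvc></plugins></config>"
```

Running `disable_liveness_check(SAMPLE)` yields a document whose storage node contains the element shown in the syntax line above. Applying the function a second time leaves a single copy of the parameter.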

- Compliance check fails with an error for the UserVars.ESXiVPsDisabledProtocols option when an ESXi host upgraded to version 6.7 is attached to a host profile with version 6.0

The issue occurs when you perform the following actions:

- Extract a host profile from an ESXi host with version 6.0.

- Upgrade the ESXi host to version 6.7.

- The host appears as non-compliant for UserVars.ESXiVPsDisabledProtocols option even after remediation.

Workaround:

- Extract a new host profile from the upgraded ESXi host and attach the host to the profile.

- Upgrade the host profile by using the Copy Settings from Host option with the upgraded ESXi host.

- After upgrade to ESXi 6.7, networking workloads on Intel 10GbE NICs cause higher CPU utilization

If you run certain types of networking workloads on an upgraded ESXi 6.7 host, you might see a higher CPU utilization under the following conditions:

- The NICs on the ESXi host are from the Intel 82599EB or X540 families

- The workload involves multiple VMs that run simultaneously and each VM is configured with multiple vCPUs

- Before the upgrade to ESXi 6.7, the VMKLinux ixgbe driver was used

Workaround: Revert to the legacy VMKLinux ixgbe driver:

- Connect to the ESXi host and run the following command:

# esxcli system module set -e false -m ixgben

- Reboot the host.

Note: The legacy VMKLinux ixgbe inbox driver version 3.7.x does not support Intel X550 NICs. Use the VMKLinux ixgbe async driver version 4.x with Intel X550 NICs.

- Unable to unstage patches when using an external Platform Services Controller

If you are patching an external Platform Services Controller (an MxN topology) using the VMware Appliance Management Interface with patches staged to an update repository, and then attempt to unstage the patches, the following error message is reported:

Error in method invocation [Errno 2] No such file or directory: '/storage/core/software-update/stage'

Workaround:

- Access the appliance shell and log in as a user who has a super administrator role.

- Run the command software-packages unstage to unstage the staged patches. All directories and files generated by the staging process are removed.

- Refresh the VMware Appliance Management Interface, which will now report the patches as being removed.

- Initial install of DELL CIM VIB might fail to respond

After you install a third-party CIM VIB it might fail to respond.

Workaround: To fix this issue, enter the following two commands to restart sfcbd:

esxcli system wbem set --enable false

esxcli system wbem set --enable true

vSphere 6.0 has been released with great new features and enhancements. One of the biggest rumors before the release of vSphere 6.0 was that VMware was going to stop releasing the vSphere C# Client. That is not true. With vSphere 6.0, VMware ships the vSphere C# Client with the vCenter Server installer. However, all new features from vSphere 5.1 onwards are available only in the vSphere Web Client. For troubleshooting purposes, VMware has added read-only support to the vSphere C# Client for compatibility levels 5.1, 5.5, and 6, also known as virtual hardware versions 9, 10, and 11. This allows you to edit the settings available in compatibility level 5 (vHW8) and to view vHW9+ settings. One use case is connecting directly to a host to add CPU or RAM to your powered-off vCenter Server.

As we already discussed, virtual machine hardware features are limited to hardware version 8 and earlier in the vSphere Client 6.0. All the features introduced in vSphere 5.5 and beyond are available only through the vSphere Web Client. The traditional vSphere Client will continue to operate, supporting the same feature set as vSphere 5.0.

The following hardware version 9 to 11 features are read-only and unavailable to edit with vSphere Client 6.0. You need to use vSphere Web Client to edit the following features.

- SATA controller and hardware settings

- SR-IOV

- GPU 3D render and memory settings

- Tuning latency

- vFlash settings

- Nested HV

- vCPU ref counters

- Scheduled HW upgrade

I am sure vSphere Client availability with vSphere 6.0 will be happy news for all VMware administrators.